SaQC

- class SaQC(data=None, flags=None, scheme='float')

Bases: FunctionsMixin

Attributes Summary

attrs – Dictionary of global attributes of this dataset.

Methods Summary

align(field, freq[, method, order, overwrite]) – Convert time series to specified frequency.

andGroup(field[, group, target, flag]) – Logical AND operation for Flags.

assignChangePointCluster(field, stat_func, ...) – Label data where it changes significantly.

assignKNNScore(field, target[, n, func, ...]) – Score datapoints by an aggregation of the distances to their k nearest neighbors.

assignLOF(field, target[, n, freq, ...]) – Assign Local Outlier Factor (LOF).

assignRegimeAnomaly(field, cluster_field, spread) – A function to detect values belonging to an anomalous regime regarding modelling regimes of field.

assignUniLOF(field[, n, algorithm, p, ...]) – Assign "univariate" Local Outlier Factor (LOF).

assignZScore(field[, window, norm_func, ...]) – Calculate (rolling) Zscores.

calculatePolynomialResiduals(field, window, ...) – Fits a polynomial model to the data and calculates the residuals.

calculateRollingResiduals(field, window[, ...]) – Calculate the diff of a rolling-window function and the data.

clearFlags(field, **kwargs) – Assign UNFLAGGED value to all periods in field.

concatFlags(field[, target, method, invert, ...]) – Project flags/history of field to target and adjust to the frequency grid of target by 'undoing' former interpolation, shifting or resampling operations.

copy([deep])

copyField(field, target[, overwrite]) – Make a copy of the data and flags of field.

correctDrift(field, maintenance_field, model) – The function corrects drifting behavior.

correctOffset(field, max_jump, spread, ...)

correctRegimeAnomaly(field, cluster_field, model) – Function fits the passed model to the different regimes in data[field] and tries to correct those values that have been assigned a negative label by data[cluster_field].

dropField(field, **kwargs) – Drops field from the data and flags.

fitLowpassFilter(field, cutoff[, nyq, ...]) – Fits the data using the Butterworth filter.

fitPolynomial(field, window, order[, ...]) – Fits a polynomial model to the data.

flagByClick(field[, max_gap, gui_mode, ...]) – Pop up GUI for adding or removing flags by selection of points in the data plot.

flagByGrubbs(field, window[, alpha, ...]) – Flag outliers using the Grubbs algorithm.

flagByScatterLowpass(field, window, thresh) – Flag data chunks of length window dependent on the data deviation.

flagByStatLowPass(field, window, thresh[, ...]) – Flag data chunks of length window dependent on the data deviation.

flagByStray(field[, window, min_periods, ...]) – Flag outliers in 1-dimensional (score) data using the STRAY Algorithm.

flagByVariance(field, window, thresh[, ...]) – Flag low-variance data.

flagChangePoints(field, stat_func, ...[, ...]) – Flag values that represent a system state transition.

flagConstants(field, thresh, window[, ...]) – Flag constant data values.

flagDriftFromNorm(field, window, spread[, ...]) – Flags data that deviates from an average data course.

flagDriftFromReference(field, reference, ...) – Flags data that deviates from a reference course.

flagDummy(field, **kwargs) – Function does nothing but return data and flags.

flagGeneric(field, func[, target, flag]) – Flag data based on a given function.

flagIsolated(field, gap_window, group_window) – Find and flag temporally isolated groups of data.

flagJumps(field, thresh, window[, ...]) – Flag jumps and drops in data.

flagLOF(field[, n, thresh, algorithm, p, flag]) – Flag values where the Local Outlier Factor (LOF) exceeds cutoff.

flagMAD(field[, window, z, min_residuals, ...]) – Flag outliers using the modified Z-score outlier detection method.

flagMVScores(field[, trafo, alpha, n, func, ...]) – The algorithm implements a 3-step outlier detection procedure for simultaneous flagging of higher-dimensional data (dimensions > 3).

flagManual(field, mdata[, method, mformat, ...]) – Include flags listed in external data.

flagMissing(field[, flag, dfilter]) – Flag NaNs in data.

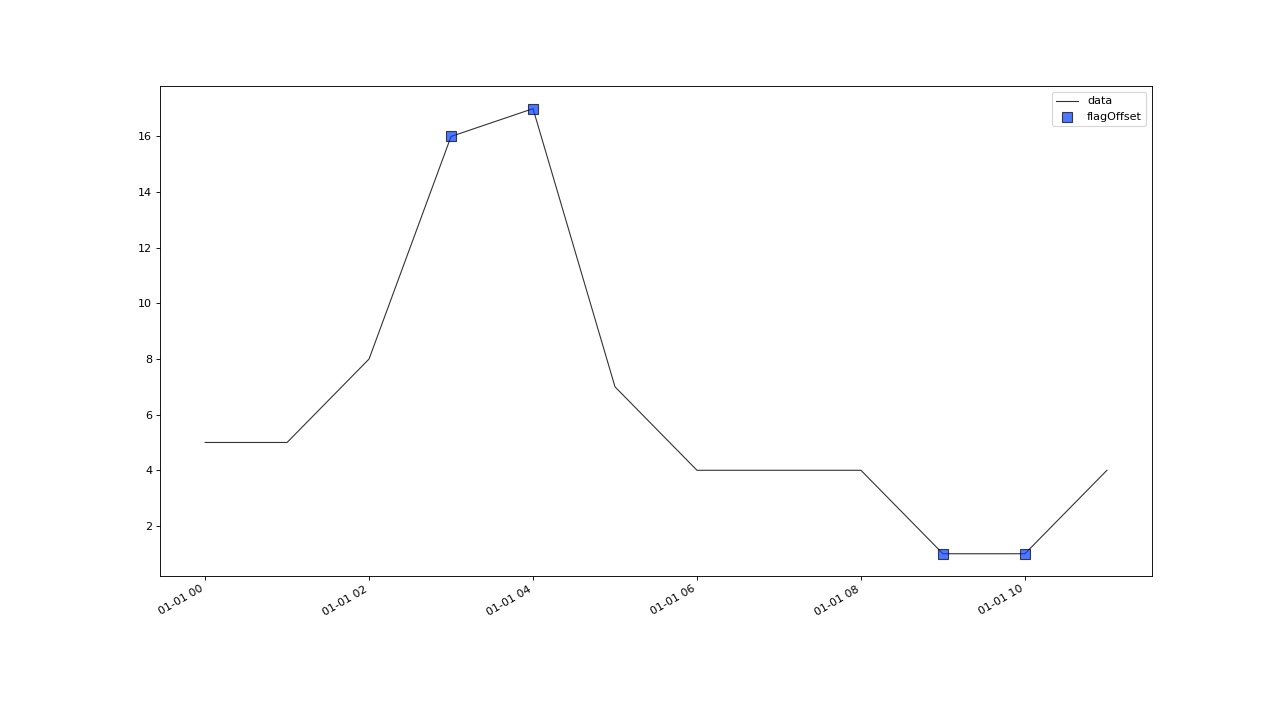

flagOffset(field, tolerance, window[, ...]) – A basic outlier test that works on regularly and irregularly sampled data.

flagPatternByDTW(field, reference[, ...]) – Pattern Recognition via Dynamic Time Warping.

flagRaise(field, thresh, raise_window, freq) – The function flags raises and drops in value courses that exceed a certain threshold within a certain timespan.

flagRange(field[, min, max, flag]) – Function flags values exceeding the closed interval [min, max].

flagRegimeAnomaly(field, cluster_field, spread) – Flags anomalous regimes regarding modelling regimes of field.

flagUnflagged(field[, flag]) – Function sets a flag at all unflagged positions.

flagUniLOF(field[, n, thresh, algorithm, p, ...]) – Flag "univariate" Local Outlier Factor (LOF) exceeding cutoff.

flagZScore(field[, method, window, thresh, ...]) – Flag data where its (rolling) Zscore exceeds a threshold.

forceFlags(field[, flag]) – Set whole column to a flag value.

interpolateByRolling(field, window[, func, ...]) – Replace NaN by the aggregation result of the surrounding window.

orGroup(field[, group, target, flag]) – Logical OR operation for Flags.

plot(field[, path, max_gap, mode, history, ...]) – Plot data and flags or store plot to file.

processGeneric(field, func[, target, dfilter]) – Generate/process data with user defined functions.

propagateFlags(field, window[, method, ...]) – Flag values before or after flags set by the last test.

reindex(field, index[, method, tolerance, ...]) – Change a variable's index.

renameField(field, new_name, **kwargs) – Rename field in data and flags.

resample(field, freq[, func, method, maxna, ...]) – Resample data points and flags to a regular frequency.

rolling(field, window[, target, func, ...]) – Calculate a rolling-window function on the data.

selectTime(field, mode[, selection_field, ...]) – Realizes masking within saqc.

setFlags(field, data[, override, flag]) – Include flags listed in external data.

transferFlags(field[, target, squeeze, ...]) – Transfer Flags of one variable to another.

transform(field, func[, freq]) – Transform data by applying a custom function on data chunks of variable size.

Attributes Documentation

- attrs

Dictionary of global attributes of this dataset.

- columns

- data

- flags

- scheme

Methods Documentation

- align(field, freq, method='time', order=2, overwrite=False, **kwargs)

Convert time series to specified frequency. Values affected by frequency changes will be interpolated using the given method.

- Parameters:

field (str | list[str]) – Variable to process.

freq (str) – Target frequency.

method (str (default: 'time')) – Interpolation technique to use. One of:

'nshift': Shift grid points to the nearest time stamp in the range +/- 0.5 * freq.

'bshift': Shift grid points to the first succeeding time stamp (if any).

'fshift': Shift grid points to the last preceding time stamp (if any).

'linear': Ignore the index and treat the values as equally spaced.

'time', 'index', 'values': Use the actual numerical values of the index.

'pad': Fill in NaNs using existing values.

'spline', 'polynomial': Passed to scipy.interpolate.interp1d. These methods use the numerical values of the index. An order must be specified, e.g. qc.interpolate(method='polynomial', order=5).

'nearest', 'zero', 'slinear', 'quadratic', 'cubic', 'barycentric': Passed to scipy.interpolate.interp1d. These methods use the numerical values of the index.

'krogh', 'spline', 'pchip', 'akima', 'cubicspline': Wrappers around the SciPy interpolation methods of similar names.

'from_derivatives': Refers to scipy.interpolate.BPoly.from_derivatives.

order (int (default: 2)) – Order of the interpolation method, ignored if not supported by the chosen method.

extrapolate – Use this parameter to perform extrapolation instead of interpolation on the trailing and/or leading chunks of NaN values in the data series.

None (default) – perform interpolation

'forward'/'backward' – perform forward/backward extrapolation

'both' – perform forward and backward extrapolation

overwrite (bool (default: False)) – If set to True, existing flags will be cleared.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal or worse to this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC
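To illustrate the 'nshift' option described above, here is a minimal pure-Python sketch. It is not the saqc implementation: timestamps are plain numbers (for example minutes) instead of a pandas DatetimeIndex, the target grid runs from a start to a stop value in steps of freq, and the helper name nshift_align is hypothetical.

```python
from typing import Dict, Optional, Sequence


def nshift_align(
    times: Sequence[float],
    values: Sequence[float],
    freq: float,
    start: float,
    stop: float,
) -> Dict[float, Optional[float]]:
    """Sketch of 'nshift': every grid point takes the value of the nearest
    timestamp within +/- freq/2, or None if no timestamp lies in that range."""
    out: Dict[float, Optional[float]] = {}
    g = start
    while g <= stop:
        # (distance, timestamp, value) for all samples close enough to grid point g
        candidates = [(abs(t - g), t, v) for t, v in zip(times, values) if abs(t - g) <= freq / 2]
        # smallest distance wins; on a tie the earlier timestamp wins
        out[g] = min(candidates)[2] if candidates else None
        g += freq
    return out


# samples at minutes 0, 9, 21, 32 projected onto a 10-minute grid
print(nshift_align([0, 9, 21, 32], [1.0, 2.0, 3.0, 4.0], 10, 0, 30))
```

Grid points with no sample within half a period stay empty (None here), which mirrors the "+/- 0.5 * freq" range stated for 'nshift'.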

- andGroup(field, group=None, target=None, flag=255.0, **kwargs)

Logical AND operation for Flags.

Flag the variable(s) field at every period at which field is flagged in all of the SaQC objects in group.

See Examples section for examples.

- Parameters:

field (str | list[str]) – Variable to process.

group (Optional[Sequence[SaQC]] (default: None)) – A collection of SaQC objects. Flag checks are performed on all SaQC objects based on the variables specified in field. Whenever all monitored variables are flagged, the associated timestamps will receive a flag.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal or worse to this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

Examples

Flag data if the values are above a certain threshold (determined by flagRange()) AND if the values are constant for 3 periods (determined by flagConstants()):

>>> dat = pd.Series([1,0,0,0,1,2,3,4,5,5,5,4], name='data', index=pd.date_range('2000', freq='10min', periods=12))
>>> qc = saqc.SaQC(dat)
>>> qc = qc.andGroup('data', group=[qc.flagRange('data', max=4), qc.flagConstants('data', thresh=0, window=3)])
>>> qc.flags['data']
2000-01-01 00:00:00     -inf
2000-01-01 00:10:00     -inf
2000-01-01 00:20:00     -inf
2000-01-01 00:30:00     -inf
2000-01-01 00:40:00     -inf
2000-01-01 00:50:00     -inf
2000-01-01 01:00:00     -inf
2000-01-01 01:10:00     -inf
2000-01-01 01:20:00    255.0
2000-01-01 01:30:00    255.0
2000-01-01 01:40:00    255.0
2000-01-01 01:50:00     -inf
Freq: 10min, dtype: float64

Masking data so that a test result only gets assigned during daytime (between 6 and 18 o'clock, for example). The daytime condition is generated via flagGeneric():

>>> from saqc.lib.tools import periodicMask
>>> mask_func = lambda x: ~periodicMask(x.index, '06:00:00', '18:00:00', True)
>>> dat = pd.Series(range(100), name='data', index=pd.date_range('2000', freq='4h', periods=100))
>>> qc = saqc.SaQC(dat)
>>> qc = qc.andGroup('data', group=[qc.flagRange('data', max=5), qc.flagGeneric('data', func=mask_func)])
>>> qc.flags['data'].head(20)
2000-01-01 00:00:00     -inf
2000-01-01 04:00:00     -inf
2000-01-01 08:00:00     -inf
2000-01-01 12:00:00     -inf
2000-01-01 16:00:00     -inf
2000-01-01 20:00:00     -inf
2000-01-02 00:00:00     -inf
2000-01-02 04:00:00     -inf
2000-01-02 08:00:00    255.0
2000-01-02 12:00:00    255.0
2000-01-02 16:00:00    255.0
2000-01-02 20:00:00     -inf
2000-01-03 00:00:00     -inf
2000-01-03 04:00:00     -inf
2000-01-03 08:00:00    255.0
2000-01-03 12:00:00    255.0
2000-01-03 16:00:00    255.0
2000-01-03 20:00:00     -inf
2000-01-04 00:00:00     -inf
2000-01-04 04:00:00     -inf
Freq: 4h, dtype: float64

- assignChangePointCluster(field, stat_func, thresh_func, window, min_periods, reduce_window=None, reduce_func=<function ChangepointsMixin.<lambda>>, model_by_resids=False, **kwargs)

Label data where it changes significantly.

The labels will be stored in data. Unless target is given, the labels will overwrite the data in field. The flags will always be set to UNFLAGGED.

Assigns labels to the data, aiming to reflect continuous regimes of the processes the data is assumed to be generated by. The regime changepoint detection is based on a sliding window search.

- Parameters:

field (str | list[str]) – Variable to process.

stat_func (Callable[[ndarray, ndarray], float]) – A function that assigns a value to every twin window. The left window content will be passed to the first argument, the right window content to the second.

thresh_func (Callable[[ndarray, ndarray], float]) – A function that determines the value level which, when exceeded, qualifies a timestamp's stat_func value as denoting a changepoint.

window (Union[str, Tuple[str, str]]) – Size of the rolling windows the calculation is performed in. If it is a single frequency offset, it applies to both the backward- and the forward-facing window.

If two offsets are passed (as a tuple), the first defines the size of the backward-facing window, the second the size of the forward-facing window.

min_periods (Union[int, Tuple[int, int]]) – Minimum number of observations in a window required to perform the changepoint test. If it is a tuple of two ints, the first refers to the backward-, the second to the forward-facing window.

reduce_window (Optional[str] (default: None)) – The sliding window search method is not an exact changepoint search method and usually does not detect a single changepoint, but a “region” of change around a changepoint. If reduce_window is given, then for every window of size reduce_window, the value with index reduce_func(x, y) is selected and the others are dropped. If reduce_window is None, the reduction window size equals the twin window size with which the changepoints were detected.

reduce_func (default argmax) – A function that must return an index value upon input of two arrays x and y. The first input parameter will hold the result from the stat_func evaluation for every reduction window. The second input parameter holds the result from the thresh_func evaluation. The default reduction function just selects the value that maximizes the stat_func.

model_by_resids (bool (default: False)) – If True, the results of stat_func are written, otherwise the regime labels.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal or worse to this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC
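The sliding twin-window search and the reduction step can be sketched in plain Python. This is an illustration, not saqc's implementation, under these assumptions: window is an integer number of samples rather than an offset string, min_periods is not handled, the reduction groups runs of consecutive candidate indices and keeps the one with the largest stat_func value (mimicking the default argmax), and a regime label is incremented at every changepoint. The name changepoint_labels is hypothetical.

```python
from statistics import mean
from typing import Callable, Dict, List, Sequence, Tuple

Stat = Callable[[Sequence[float], Sequence[float]], float]


def changepoint_labels(
    values: Sequence[float], window: int, stat_func: Stat, thresh_func: Stat
) -> Tuple[List[int], List[int]]:
    n = len(values)
    # 1. twin-window search: left = backward-facing window, right = forward-facing window
    stats: Dict[int, float] = {}
    for i in range(window, n - window + 1):
        left, right = values[i - window:i], values[i:i + window]
        s = stat_func(left, right)
        if s > thresh_func(left, right):
            stats[i] = s
    # 2. reduce each "region of change" (run of consecutive candidates) to its argmax
    changepoints: List[int] = []
    run: List[int] = []
    for i in sorted(stats):
        if run and i != run[-1] + 1:
            changepoints.append(max(run, key=lambda j: stats[j]))
            run = []
        run.append(i)
    if run:
        changepoints.append(max(run, key=lambda j: stats[j]))
    # 3. regime label = number of changepoints at or before each position
    cps = set(changepoints)
    labels: List[int] = []
    label = 0
    for i in range(n):
        if i in cps:
            label += 1
        labels.append(label)
    return changepoints, labels


data = [0.0] * 10 + [5.0] * 10
cps, labels = changepoint_labels(
    data, 3, lambda l, r: abs(mean(r) - mean(l)), lambda l, r: 1.0
)
```

With a mean-difference stat_func and a constant threshold, several indices around the level shift exceed the threshold; the reduction keeps only the index where the twin windows straddle the shift exactly.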

- assignKNNScore(field, target, n=10, func='sum', freq=inf, min_periods=2, algorithm='ball_tree', metric='minkowski', p=2, **kwargs)

Score datapoints by an aggregation of the distances to their k nearest neighbors.

The function is a wrapper around the NearestNeighbors method from Python's scikit-learn library (see reference [1]).

The steps taken to calculate the scores are as follows:

1. All the timeseries given through field are combined into one feature space by an inner join on their datetime indexes. Thus, only samples that share timestamps across all fields will be included in the feature space. Any datapoint/sample where one or more of the features is invalid (=np.nan) will be excluded.

2. For every data point, the distance to its n nearest neighbors is calculated by applying the metric metric at grade p to the feature space. The defaults lead to the Euclidean metric being applied. If radius is not None, it sets the upper bound of distance for a neighbor to be considered one of the n nearest neighbors. Furthermore, the freq argument determines which samples can be included in a datapoint's nearest neighbors list, by segmenting the data into chunks of specified temporal extension and feeding those chunks to the kNN algorithm separately.

3. For every datapoint, the calculated nearest neighbor distances get aggregated to a score by the function passed to func. The default, sum, simply sums up the distances.

4. The resulting timeseries of scores gets assigned to the field target.

- Parameters:

field (List[str]) – List of variable names to process.

n (int (default: 10)) – The number of nearest neighbors whose distances are included in every datapoint's score calculation.

func (default sum) – A function that assigns a score to every one-dimensional array containing the distances to a datapoint's n nearest neighbors.

freq (Union[float, str, None] (default: inf)) – Determines the segmentation of the data into partitions to which the kNN algorithm is applied individually.

np.inf: Apply scoring on the whole data set at once

x > 0: Apply scoring on successive data chunks of x periods length

Offset string: Apply scoring on successive partitions of temporal extension matching the passed offset string

min_periods (int (default: 2)) – The minimum number of periods that have to be present in a window for the kNN scoring to be applied. If the number of periods present is below min_periods, the score for the datapoints in that window will be np.nan.

algorithm (Literal['ball_tree', 'kd_tree', 'brute', 'auto'] (default: 'ball_tree')) – The search algorithm to find each datapoint's k nearest neighbors. The keyword just gets passed on to the underlying sklearn method. See reference [1] for more information on the algorithm.

metric (str (default: 'minkowski')) – The metric with which the distances to any datapoint's neighbors are computed. The default of metric together with the default of p results in the Euclidean metric being applied. The keyword just gets passed on to the underlying sklearn method. See reference [1] for more information on the algorithm.

p (int (default: 2)) – The grade of the metric specified by parameter metric. The keyword just gets passed on to the underlying sklearn method. See reference [1] for more information on the algorithm.

target (str | list[str]) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal or worse to this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

References

[1] https://scikit-learn.org/stable/modules/generated/sklearn.neighbors.NearestNeighbors.html
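The scoring steps described for assignKNNScore can be sketched in plain Python. This is an illustration under simplifying assumptions, not the saqc or scikit-learn code: each series is a dict mapping timestamps to values, neighbors are found by brute force, a sample is excluded from its own neighbor list, and the freq segmentation and min_periods handling are left out. All helper names are hypothetical.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple


def build_feature_space(*series: Dict[int, float]) -> List[Tuple[int, Tuple[float, ...]]]:
    """Step 1: inner-join the series on their timestamps and drop rows containing NaN."""
    common = set(series[0]).intersection(*series[1:])
    rows = []
    for t in sorted(common):
        row = tuple(s[t] for s in series)
        if not any(math.isnan(v) for v in row):
            rows.append((t, row))
    return rows


def minkowski(a: Sequence[float], b: Sequence[float], p: int = 2) -> float:
    """Minkowski distance of grade p (p=2 gives the Euclidean distance)."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1.0 / p)


def knn_scores(
    points: Sequence[Sequence[float]],
    n: int = 10,
    func: Callable[[List[float]], float] = sum,
    p: int = 2,
) -> List[float]:
    """Steps 2 and 3: aggregate the distances to the n nearest neighbors with func."""
    scores = []
    for i, a in enumerate(points):
        dists = sorted(minkowski(a, b, p) for j, b in enumerate(points) if j != i)
        scores.append(func(dists[:n]))
    return scores


x = {0: 0.0, 1: 0.0, 2: 1.0, 3: 10.0}
y = {0: 0.0, 1: 1.0, 2: 0.0, 3: 10.0, 4: 5.0}  # timestamp 4 is missing in x
rows = build_feature_space(x, y)
scores = knn_scores([r for _, r in rows], n=2)
```

The isolated sample at timestamp 3 gets by far the largest score, while timestamp 4 never enters the feature space because it is not shared by all series.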

- assignLOF(field, target, n=20, freq=inf, min_periods=2, algorithm='ball_tree', p=2, **kwargs)

Assign Local Outlier Factor (LOF).

- Parameters:

field (List[str]) – List of variable names to process.

n (int (default: 20)) – Number of periods to be included into the LOF calculation. Defaults to 20, which is a value found to be suitable in the literature.

freq (Union[float, str, None] (default: inf)) – Determines the segmentation of the data into partitions to which the kNN algorithm is applied individually.

algorithm (Literal['ball_tree', 'kd_tree', 'brute', 'auto'] (default: 'ball_tree')) – Algorithm used for calculating the n-nearest neighbors needed for LOF calculation.

p (int (default: 2)) – Degree of the metric (“Minkowski”) according to which the distance to neighbors is determined. Most important values are:

1 – Manhattan metric

2 – Euclidean metric

target (str | list[str]) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal or worse to this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

Notes

n determines the “locality” of an observation (its n nearest neighbors) and sets the upper limit for the size of outlier clusters (i.e. consecutive outliers). Outlier clusters of size greater than n/2 may not be detected reliably.

The larger n, the lower the algorithm’s sensitivity to local outliers and small or singleton outlier points. Higher values greatly increase numerical costs.
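For reference, here is a compact brute-force sketch of the standard LOF score (k-distance, reachability distance, local reachability density) that the n and p parameters feed into. It is an illustration, not the routine saqc uses internally. Assumptions: exactly n neighbors per point (no tie handling), no duplicate points, and a Minkowski distance of degree p. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def minkowski(a: Point, b: Point, p: int = 2) -> float:
    """Minkowski distance of degree p (p=1: Manhattan, p=2: Euclidean)."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1.0 / p)


def lof_scores(points: Sequence[Point], n: int = 20, p: int = 2) -> List[float]:
    """Brute-force Local Outlier Factor for every point (illustrative sketch)."""
    m = len(points)
    if m < 2:
        raise ValueError("need at least two points")
    k = min(n, m - 1)
    d = [[minkowski(a, b, p) for b in points] for a in points]
    # indices of the k nearest neighbors of each point, the point itself excluded
    neigh = [sorted((j for j in range(m) if j != i), key=lambda j: d[i][j])[:k] for i in range(m)]
    # k-distance: distance from each point to its k-th nearest neighbor
    kdist = [d[i][neigh[i][-1]] for i in range(m)]
    # local reachability density: k divided by the summed reachability distances
    lrd = []
    for i in range(m):
        reach = sum(max(kdist[j], d[i][j]) for j in neigh[i])
        lrd.append(k / reach if reach > 0 else float("inf"))
    # LOF: mean neighbor density relative to the point's own density (about 1 for inliers)
    return [sum(lrd[j] for j in neigh[i]) / (k * lrd[i]) for i in range(m)]


scores = lof_scores([(0.0,), (1.0,), (2.0,), (3.0,), (10.0,)], n=2)
```

Evenly spaced points score exactly 1, while the distant point scores well above 1. Increasing n lets a single outlier be compared against more of the regular points, which is where the growing numerical cost mentioned above comes from.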

- assignRegimeAnomaly(field, cluster_field, spread, method='single', metric=<function DriftMixin.<lambda>>, frac=0.5, **kwargs)

A function to detect values belonging to an anomalous regime regarding modelling regimes of field.

The function changes the value of the regime cluster labels to be negative. “Normality” is determined in terms of a maximum spreading distance that regimes must not exceed with respect to a certain metric and linkage method. In addition, a range of regimes is only considered “normal” if it models more than frac percentage of the valid samples in field. Note that you must detect the regime changepoints prior to calling this function (the labels are expected to be stored in the column given by cluster_field).

Note that it is possible to perform hypothesis tests for regime equality by passing the metric a function for p-value calculation and selecting the linkage method “complete”.

- Parameters:

field (str | list[str]) – Variable to process.

cluster_field (str) – Column in data holding the cluster labels for the samples in field (has to be indexed equal to field).

spread (float) – A threshold denoting the value level up to which clusters are agglomerated.

method (Literal['single', 'complete', 'average', 'weighted', 'centroid', 'median', 'ward'] (default: 'single')) – The linkage method for hierarchical (agglomerative) clustering of the variables.

metric (Callable[[ndarray, ndarray], float] (default: <function DriftMixin.<lambda>>)) – A metric function for calculating the dissimilarity between 2 regimes. Defaults to the absolute difference in mean.

frac (float (default: 0.5)) – The minimum percentage of samples the “normal” group has to comprise to actually be the normal group. Must be in the closed interval [0, 1], otherwise a ValueError is raised.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal or worse to this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC
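The labelling rule can be sketched for the defaults method='single' and a mean-difference metric. With one-dimensional regime means, single-linkage clustering at threshold spread reduces to sorting the means and splitting wherever consecutive means differ by more than spread. The sketch is hypothetical: it works on plain lists instead of data[field] and data[cluster_field], assumes positive integer labels so that negation is visible, and leaves all labels unchanged when no group exceeds frac. It only illustrates the rule described above, not saqc's code.

```python
from statistics import mean
from typing import Dict, List, Sequence


def regime_anomaly_labels(
    values: Sequence[float], labels: Sequence[int], spread: float, frac: float = 0.5
) -> List[int]:
    """Negate the labels of all regimes outside the "normal" group (sketch)."""
    if not 0 <= frac <= 1:
        raise ValueError("frac must be in the closed interval [0, 1]")
    regimes: Dict[int, List[float]] = {}
    for v, lab in zip(values, labels):
        regimes.setdefault(lab, []).append(v)
    means = {lab: mean(vs) for lab, vs in regimes.items()}

    # single linkage on 1-D means: sort them and split wherever a gap exceeds spread
    ordered = sorted(means, key=lambda lab: means[lab])
    clusters: List[List[int]] = [[ordered[0]]]
    for prev, cur in zip(ordered, ordered[1:]):
        if means[cur] - means[prev] > spread:
            clusters.append([])
        clusters[-1].append(cur)

    def n_samples(cluster: List[int]) -> int:
        return sum(len(regimes[lab]) for lab in cluster)

    # the largest group is "normal" only if it models more than frac of the samples
    normal = max(clusters, key=n_samples)
    if n_samples(normal) <= frac * len(values):
        return list(labels)
    anomalous = {lab for c in clusters if c is not normal for lab in c}
    return [-lab if lab in anomalous else lab for lab in labels]


vals = [0.0, 0.0, 0.0, 0.0, 5.0, 5.0, 0.1, 0.1, 0.1, 0.1]
labs = [1, 1, 1, 1, 2, 2, 3, 3, 3, 3]
result = regime_anomaly_labels(vals, labs, spread=1.0)
```

Regimes 1 and 3 lie within spread of each other and together hold 8 of 10 samples, so only regime 2 receives a negative label.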

- assignUniLOF(field, n=20, algorithm='ball_tree', p=1, density='auto', fill_na=True, **kwargs)

Assign “univariate” Local Outlier Factor (LOF).

The function is a wrapper around a usual LOF implementation, aiming to provide an easy-to-use outlier scoring function with a minimal set of parameters for singleton variables that does not require prior modelling of the variable. LOF is applied to a concatenation of the field variable and a “temporal density”, or “penalty”, variable that measures the temporal distance between data points.

See the Notes section for more details on the algorithm.

- Parameters:

field (str | list[str]) – Variable to process.

n (int (default: 20)) – Number of periods to be included into the LOF calculation. Defaults to 20, which is a value found to be suitable in the literature.

n determines the “locality” of an observation (its n nearest neighbors) and sets the upper limit for the size of outlier clusters (i.e. consecutive outliers). Outlier clusters of size greater than n/2 may not be detected reliably.

The larger n, the lower the algorithm’s sensitivity to local outliers and small or singleton outlier points. Higher values greatly increase numerical costs.

algorithm (Literal['ball_tree', 'kd_tree', 'brute', 'auto'] (default: 'ball_tree')) – Algorithm used for calculating the n-nearest neighbors needed for LOF calculation.

p (int (default: 1)) – Degree of the metric (“Minkowski”) according to which the distance to neighbors is determined. Most important values are:

1 – Manhattan metric

2 – Euclidean metric

density (Union[Literal['auto'], float] (default: 'auto')) – How to calculate the temporal distance/density for the variable to be flagged.

float – introduces linear density with an increment equal to density

Callable – calculates the density by applying the passed function to the variable to be flagged (passed as Series).

fill_na (bool (default: True)) – If True, NaNs in the data are filled with a linear interpolation.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal or worse to this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

Notes

Algorithm steps for uniLOF scoring of variable x:

1. The temporal density dt(x) is calculated according to the density parameter.

2. LOF scores LOF(x) are calculated for the concatenation [x, dt(x)].

3. In flagUniLOF, x is flagged where LOF(x) exceeds the threshold determined by the parameter thresh; assignUniLOF writes the LOF(x) scores to target.

- assignZScore(field, window=None, norm_func='std', model_func='mean', center=True, min_periods=None, **kwargs)

Calculate (rolling) Zscores.

See the Notes section for a detailed overview of the calculation.

- Parameters:

field (str | list[str]) – Variable to process.

window (Optional[str] (default: None)) – Size of the window. Can be determined as:

Offset string, denoting the window's temporal extension

Integer, denoting the window's number of periods

None (default): all data points share the same scoring window, which then equals the whole data.

model_func (default mean) – Function to calculate the center moment in every window.

norm_func (default std) – Function to calculate the scaling for every window.

center (bool (default: True)) – Whether or not to center the target value in the scoring window. If False, the target value is the last value in the window.

min_periods (Optional[int] (default: None)) – Minimum number of valid measurements in a scoring window to consider the resulting score valid.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal or worse to this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

Notes

Steps of calculation:

1. Consider a window \(W\) of successive points \(W = x_{1}, \ldots, x_{w}\) containing the value \(x_{K}\) which is to be checked. (The index \(K\) depends on the selection of the parameter center.)

2. The “moment” \(M\) for the window is calculated via \(M =\) model_func(\(W\)).

3. The “scaling” \(N\) for the window is calculated via \(N =\) norm_func(\(W\)).

4. The “score” \(S\) for the point \(x_{K}\) is calculated via \(S = (x_{K} - M) / N\).
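The four steps can be written out directly. The sketch below assumes model_func = mean and norm_func = std (the sample standard deviation, which is also the pandas default), an integer window, and an odd window when center=True. It returns None wherever no valid score exists. The function name rolling_zscore is hypothetical and this is not the saqc code.

```python
import statistics
from typing import List, Optional, Sequence


def rolling_zscore(
    values: Sequence[float],
    window: Optional[int] = None,
    center: bool = True,
    min_periods: Optional[int] = None,
) -> List[Optional[float]]:
    """Score S = (x_K - M) / N with M = mean(W) and N = std(W) for every x_K."""
    scores: List[Optional[float]] = []
    for k, x in enumerate(values):
        if window is None:
            w = values  # one window covering the whole data
        elif center:
            half = window // 2  # odd window: x_K sits in the middle
            w = values[max(0, k - half): k + half + 1]
        else:
            w = values[max(0, k - window + 1): k + 1]  # x_K is the last value
        if (min_periods is not None and len(w) < min_periods) or len(w) < 2:
            scores.append(None)  # too few values for a valid score
            continue
        m = statistics.mean(w)   # model_func: "moment" M
        n = statistics.stdev(w)  # norm_func: "scaling" N
        scores.append((x - m) / n if n > 0 else None)
    return scores


print(rolling_zscore([1, 2, 3]))  # single window over all data
print(rolling_zscore([1, 2, 3, 4, 5], window=3, min_periods=3))
```

Near the series edges a centered window is truncated, so min_periods decides whether such partial windows still produce a score.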

- calculatePolynomialResiduals(field, window, order, min_periods=0, **kwargs)

Fits a polynomial model to the data and calculates the residuals.

The residual is calculated by fitting a polynomial of degree order to a data slice of size window that has x at its center.

Note that calculating the residuals tends to be quite costly, because a function fitting is performed for every sample. To improve performance, consider the following possibilities:

In case your data is sampled at an equidistant frequency grid:

(1) If you know your data to have no significant number of missing values, or if you do not want to calculate residuals for windows containing missing values anyway, performance can be increased by setting min_periods=window.

Note that the initial and final window/2 values do not get fitted.

Each residual gets assigned the worst flag present in the interval of the original data.

- Parameters:

field (str | list[str]) – Variable to process.

window (str | int) – The size of the window you want to use for fitting. If an integer is passed, the size refers to the number of periods for every fitting window. If an offset string is passed, the size refers to the total temporal extension. The window will be centered around the value to be fitted. For regularly sampled timeseries, the period number will be cast down to an odd number if even.

order (int) – The degree of the polynomial used for fitting.

min_periods (int (default: 0)) – The minimum number of periods that has to be available in every value's fitting surrounding for the polynomial fit to be performed. If there are not enough values, np.nan gets assigned. The default (0) results in fitting regardless of the number of values present (which results in overfitting for too sparse intervals). To automatically set the minimum number of periods to the number of values in an offset-defined window size, pass np.nan.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal or worse to this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC
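The centered fit can be sketched in plain Python for an integer window on a regular grid. Assumptions, none of which come from the saqc source: the residual is observed value minus fitted value, the least-squares fit solves the normal equations with a small Gaussian elimination, offsets are taken relative to the center sample (so the fitted center value is the constant coefficient), and None stands in for np.nan. The helper names are hypothetical. The sketch also shows why the method is costly: one full fit is performed per sample.

```python
import math
from typing import List, Optional, Sequence


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def polynomial_residuals(
    values: Sequence[float], window: int, order: int, min_periods: int = 0
) -> List[Optional[float]]:
    if window % 2 == 0:
        window -= 1  # cast even period numbers down to an odd number
    half = window // 2
    out: List[Optional[float]] = [None] * len(values)  # first/last window//2 stay unfitted
    for k in range(half, len(values) - half):
        # (offset from center, value) pairs of the valid samples in the window
        pts = [(i - k, values[i]) for i in range(k - half, k + half + 1) if not math.isnan(values[i])]
        if math.isnan(values[k]) or len(pts) < max(min_periods, order + 1):
            continue  # too few values for a (well-posed) fit
        ata = [[sum(x ** (i + j) for x, _ in pts) for j in range(order + 1)] for i in range(order + 1)]
        aty = [sum(y * x ** i for x, y in pts) for i in range(order + 1)]
        fitted_at_center = _solve(ata, aty)[0]
        out[k] = values[k] - fitted_at_center
    return out


quadratic = polynomial_residuals([float(i * i) for i in range(9)], window=5, order=2)
spike = polynomial_residuals([0, 0, 0, 9, 0, 0, 0], window=3, order=1)
```

A quadratic series fitted with order=2 leaves (numerically) zero residuals, whereas a spike stands out against a linear fit of its neighbourhood.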

- calculateRollingResiduals(field, window, func='mean', min_periods=0, center=True, **kwargs)

Calculate the diff of a rolling-window function and the data.

Note that the data gets assigned the worst flag present in the original data.

- Parameters:

field (str | list[str]) – Variable to process.

window (str | int) – The size of the window you want to roll with. If an integer is passed, the size refers to the number of periods for every fitting window. If an offset string is passed, the size refers to the total temporal extension. For regularly sampled timeseries, the period number will be cast down to an odd number if center=True.

func (default mean) – Function to roll with.

min_periods (int (default: 0)) – The minimum number of periods required to get a valid value.

center (bool (default: True)) – If True, center the rolling window.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal or worse to this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC
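A minimal pure-Python sketch of the rolling residual for an integer window follows. The sign convention (value minus rolling aggregate) is an assumption, since the text above only speaks of "the diff". Truncated windows at the series edges are allowed as long as they hold at least min_periods values, and rolling_residuals is a hypothetical name rather than a saqc function.

```python
import statistics
from typing import Callable, List, Optional, Sequence


def rolling_residuals(
    values: Sequence[float],
    window: int,
    func: Callable[[Sequence[float]], float] = statistics.mean,
    min_periods: int = 0,
    center: bool = True,
) -> List[Optional[float]]:
    """Return value minus func(window) for every position (sign is an assumption)."""
    if center and window % 2 == 0:
        window -= 1  # cast even period numbers down to an odd number when centering
    half = window // 2
    out: List[Optional[float]] = []
    for k, x in enumerate(values):
        if center:
            w = values[max(0, k - half): k + half + 1]  # window centered on x
        else:
            w = values[max(0, k - window + 1): k + 1]  # trailing window ending at x
        if len(w) < max(min_periods, 1):
            out.append(None)  # not enough periods for a valid value
        else:
            out.append(x - func(w))
    return out


print(rolling_residuals([1, 1, 4, 1, 1], window=3))
```

Passing a different aggregate, for example statistics.median, turns the same loop into a rolling-median residual.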

- clearFlags(field, **kwargs)

Assign UNFLAGGED value to all periods in field.

- Parameters:

field (str | list[str]) – Variable to process.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

Notes

This function ignores the dfilter keyword, because the data is not relevant for processing. A warning is triggered if the flag keyword is given, because the flags are always set to UNFLAGGED.

See also

forceFlags – set the whole column to a flag value

flagUnflagged – set a flag value at all unflagged positions

- concatFlags(field, target=None, method='auto', invert=True, freq=None, drop=False, squeeze=False, override=False, **kwargs)

Project the flags/history of field to target and adjust them to the frequency grid of target by 'undoing' former interpolation, shifting or resampling operations.

- Parameters:

field (str | list[str]) – Variable to process.

method (Literal['fagg', 'bagg', 'nagg', 'fshift', 'bshift', 'nshift', 'sshift', 'match', 'auto'] (default: 'auto')) – Method to project the flags of field to the flags of target:

- 'auto': invert the last alignment/resampling operation (that is not already inverted)
- 'nagg': project a flag of field to all timestamps of target within the range +/- freq/2
- 'bagg': project a flag of field to all preceding timestamps of target within the range freq
- 'fagg': project a flag of field to all succeeding timestamps of target within the range freq
- 'interpolation': project a flag of field to all timestamps of target within the range +/- freq
- 'sshift': same as interpolation
- 'nshift': project a flag of field to the nearest timestamps in target within the range +/- freq/2
- 'bshift': project a flag of field to the nearest preceding timestamps in target
- 'fshift': project a flag of field to the nearest succeeding timestamps in target
- 'match': project a flag of field to all identical timestamps in target

invert (bool (default: True)) – If True, the inverse of the given method is applied instead of the method itself.

freq (Optional[str] (default: None)) – Projection range. If None, the sampling frequency of field is used.

drop (bool (default: False)) – Remove field if True.

squeeze (bool (default: False)) – Squeeze the history into a single column if True; function-specific flag information is lost.

override (bool (default: False)) – Overwrite existing flags if True.

target (str | list[str]) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

Notes

To apply the inversion of a certain method, set the invert parameter to True and pass the method you want to invert.

To backtrack a previous resampling, shifting or interpolation operation automatically, set method='auto'.
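The 'nagg' projection rule can be sketched in plain Python. This is not saqc's implementation: project_nagg is a hypothetical helper, flags are plain numbers keyed by datetime, the +/- freq/2 range is treated as inclusive, and keeping the worst (largest) flag when several flags reach the same target timestamp is an assumption.

```python
from datetime import datetime, timedelta


def project_nagg(field_flags, target_index, freq):
    """Hypothetical helper.

    field_flags: dict mapping a timestamp of field to its flag value.
    target_index: list of timestamps of target.
    freq: timedelta; each flag is projected to target timestamps within +/- freq/2.
    Returns a dict mapping each target timestamp to its projected flag (or None).
    """
    half = freq / 2
    projected = {t: None for t in target_index}
    for ts, flag in field_flags.items():
        for t in target_index:
            if abs(t - ts) <= half:  # inclusive +/- freq/2 range (assumption)
                current = projected[t]
                # Assumption: when several flags reach one timestamp, keep the worst.
                projected[t] = flag if current is None else max(current, flag)
    return projected
```

Flagging one 20-minute aggregate at 00:20 on a 10-minute target grid, for instance, marks the target timestamps 00:10, 00:20 and 00:30.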

- copyField(field, target, overwrite=False, **kwargs)

Make a copy of the data and flags of field.

- Parameters:

field (str | list[str]) – Variable to process.

overwrite (bool (default: False)) – Overwrite target if it already exists.

target (str | list[str]) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

- correctDrift(field, maintenance_field, model, cal_range=5, **kwargs)

The function corrects drifting behavior.

See the Notes section for an overview of the correction algorithm.

- Parameters:

field (str | list[str]) – Variable to process.

maintenance_field (str) – Column holding the support-point information. The data is expected to have the following form: the index of the series represents the beginning of a maintenance event, whereas the values represent its endings.

model (Union[Callable[..., float], Literal['linear', 'exponential']]) – A model function describing the drift behavior that is to be corrected. Either use the built-in exponential or linear drift model by passing a string, or pass a custom callable. The model function must always contain the keyword parameters 'origin' and 'target'. The first parameter must always be the one by which the data is passed to the model. After the data parameter, an arbitrary number of model calibration arguments can occur in the signature. See the Notes section for an extensive description.

cal_range (int (default: 5)) – Number of values to average for obtaining the value level directly after and directly before a maintenance event. Needed for shift calibration.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

Notes

It is assumed that between support points there is a drift effect shifting the measurements in a way that can be described by a model function M(t, p, origin, target) (with 0 <= t <= 1, p being a parameter set, and origin and target being floats).

Note that it is possible for the model to have no free parameters p at all (mainly for linear drift).

Directly after the last support point (t=0), the drift model should evaluate to the origin calibration level (origin), and directly before the next support point (t=1), it should evaluate to the target calibration level (target):

M(0, p, origin, target) = origin

M(1, p, origin, target) = target

The model is then fitted to every data chunk between support points by optimizing the parameters p, thus obtaining the optimal parameter set P.

The new values at t are computed via:

new_vals(t) = old_vals(t) + M(t, P, origin, target) - M_drift(t, P, origin, new_target)

where new_target represents the value level immediately after the next support point.

Examples

Some examples of meaningful drift models.

Linear drift model (no free parameters):

>>> Model = lambda t, origin, target: origin + t * (target - origin)

Exponential drift model (exponential rise):

>>> import numpy as np
>>> expFunc = lambda t, a, b, c: a + b * (np.exp(c * t) - 1)
>>> Model = lambda t, c, origin, target: expFunc(t, origin, (target - origin) / (np.exp(abs(c)) - 1), abs(c))

Exponential and linear drift models are part of the ts_operators library, under the names expDriftModel and linearDriftModel.

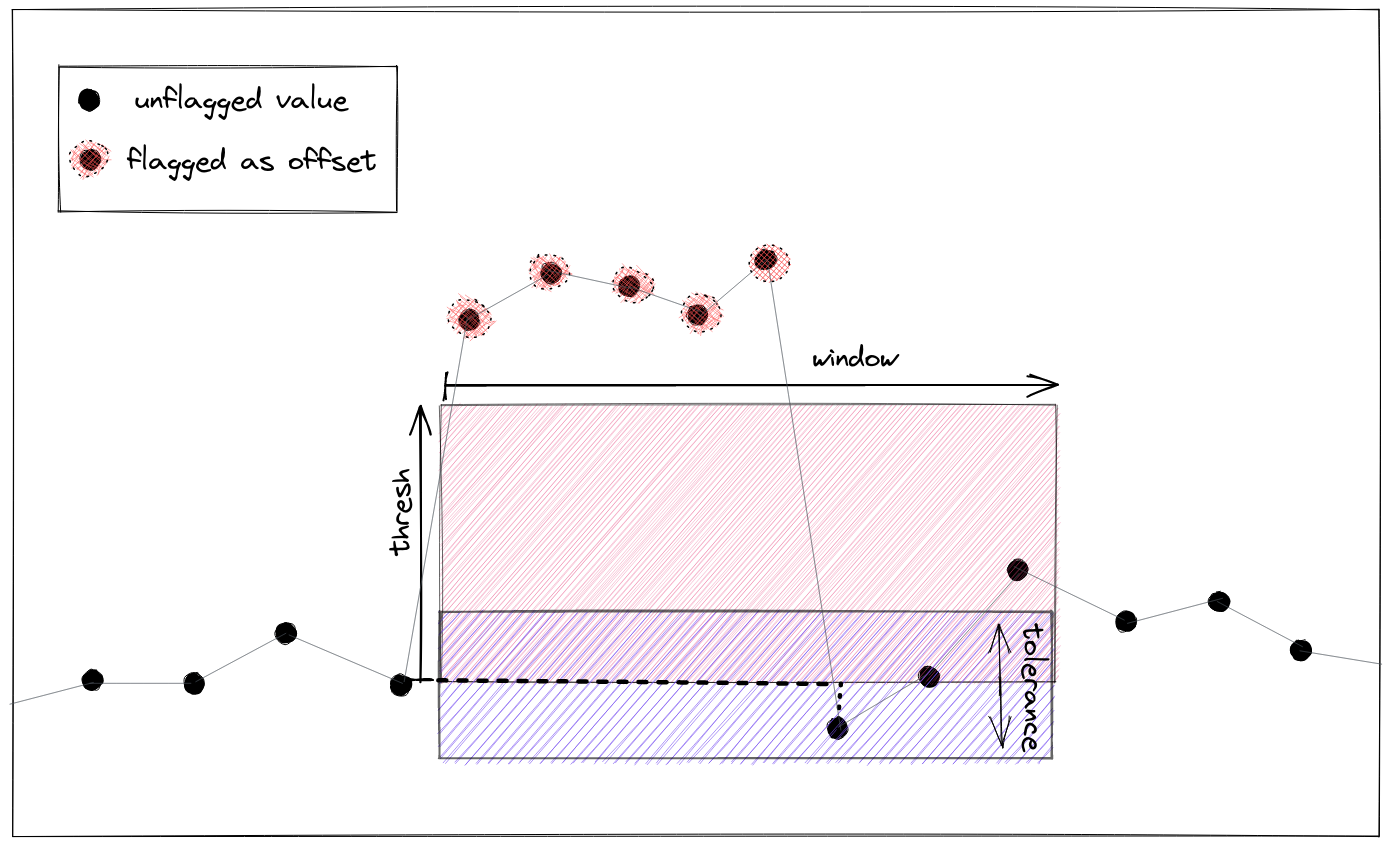
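The boundary conditions M(0, p, origin, target) = origin and M(1, p, origin, target) = target can be checked numerically. The following is a minimal sketch in plain Python, not saqc's ts_operators code: linear_drift, exp_drift and satisfies_boundaries are hypothetical names for a linear model and an exponential model with one free parameter c, following the shapes described in this section. The exponential model is undefined for c = 0.

```python
import math


def linear_drift(t, origin, target):
    # No free parameters: straight line from origin (t=0) to target (t=1).
    return origin + t * (target - origin)


def exp_drift(t, c, origin, target):
    # One free parameter c; its sign is ignored, c must not be 0.
    c = abs(c)
    b = (target - origin) / (math.exp(c) - 1)
    return origin + b * (math.exp(c * t) - 1)


def satisfies_boundaries(model, origin, target, *params, tol=1e-9):
    # Check M(0, p, origin, target) == origin and M(1, p, origin, target) == target.
    at_start = model(0.0, *params, origin=origin, target=target)
    at_end = model(1.0, *params, origin=origin, target=target)
    return abs(at_start - origin) < tol and abs(at_end - target) < tol
```

Any custom drift model passed as model should pass the same check for the parameter values it is fitted with.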

- correctOffset(field, max_jump, spread, window, min_periods, tolerance=None, **kwargs)

- Parameters:

field (str | list[str]) – Variable to process.

max_jump (float) – When searching for changepoints in the mean, this is the threshold that a mean difference in the sliding window search must exceed to trigger changepoint detection.

spread (float) – Threshold denoting the maximum absolute difference between the means of regimes that form the "normal group" of values.

window (str) – Size of the adjacent windows that are used to search for the mean changepoints.

min_periods (int) – Minimum number of periods a search window has to contain for the result of the changepoint detection to be considered valid.

tolerance (Optional[str] (default: None)) – If an offset string is passed, a data chunk of length offset right after the start and right before the end of any regime is ignored when calculating a regime's mean for data correction. This accounts for the unreliability of data near the changepoints of regimes.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

- correctRegimeAnomaly(field, cluster_field, model, tolerance=None, epoch=False, **kwargs)

The function fits the passed model to the different regimes in data[field] and tries to correct those values that have been assigned a negative label by data[cluster_field].

Currently, the only correction mode supported is "parameter propagation".

This means that any regime \(z\), labeled negatively and modeled by the parameters \(p\), gets corrected via:

\(z_{correct} = z + (m(p^*) - m(p))\),

where \(p^*\) denotes the parameter set belonging to the fit of the nearest not-negatively labeled cluster.

- Parameters:

field (str | list[str]) – Variable to process.

cluster_field (str) – A string denoting the field in data that holds the cluster labels for the data you want to correct.

model (CurveFitter) – The model function to be fitted to the regimes. It must be a function of the form \(f(x, *p)\), where \(x\) is the numpy.array holding the independent variables and \(p\) are the model parameters that are to be obtained by fitting. Depending on the epoch parameter, the independent variable x will either be the timestamps of every regime transformed to seconds from epoch, or just seconds counted from the start of the regime.

tolerance (Optional[str] (default: None)) – If an offset string is passed, a data chunk of length offset right at the start and right at the end is ignored when fitting the model. This accounts for the unreliability of data near the changepoints of regimes. Defaults to None.

epoch (bool (default: False)) – If True, use "seconds from epoch" as x input to the model function, instead of "seconds from regime start".

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC
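For the simplest possible model, a constant \(m(p) = p\) whose least-squares fit is the regime mean, the parameter propagation rule \(z_{correct} = z + (m(p^*) - m(p))\) reduces to shifting an anomalous regime by the difference of two means. The sketch below assumes a hypothetical list of (label, values) regimes in temporal order and interprets "nearest" as nearest in temporal order; both are assumptions, and correct_regimes is not part of saqc.

```python
from statistics import fmean


def correct_regimes(regimes):
    """Hypothetical helper. regimes: list of (label, values) in temporal order.

    Negative labels mark anomalous regimes. Returns the corrected value lists.
    """
    # Fit the constant model m(p) = p to every regime: the least-squares fit is the mean.
    params = [fmean(values) for _, values in regimes]
    normal = [i for i, (label, _) in enumerate(regimes) if label >= 0]
    corrected = []
    for i, (label, values) in enumerate(regimes):
        if label >= 0 or not normal:
            corrected.append(list(values))  # normal regimes stay untouched
            continue
        # Assumption: "nearest" means the closest normal regime in temporal order.
        j = min(normal, key=lambda k: abs(k - i))
        shift = params[j] - params[i]  # m(p*) - m(p)
        corrected.append([z + shift for z in values])
    return corrected
```

With richer models (for example a linear trend per regime), the same rule applies, but m(p) and m(p*) have to be evaluated at each x instead of being a single number.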

- dropField(field, **kwargs)

Drops field from the data and flags.

- Parameters:

field (str | list[str]) – Variable to process.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

- fitLowpassFilter(field, cutoff, nyq=0.5, filter_order=2, fill_method='linear', **kwargs)

Fits the data using a Butterworth filter.

- Parameters:

field (str | list[str]) – Variable to process.

cutoff (float | str) – The cutoff frequency, either an offset frequency string or expressed in multiples of the sampling rate.

nyq (float (default: 0.5)) – The Nyquist frequency, expressed in multiples of the sampling rate.

fill_method (Literal['linear', 'nearest', 'zero', 'slinear', 'quadratic', 'cubic', 'spline', 'barycentric', 'polynomial'] (default: 'linear')) – Fill method to be applied to the data before filtering (the Butterworth filter cannot handle np.nan). See the documentation of the pandas.Series.interpolate method for details on the methods associated with the different keywords.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

Notes

The data is expected to be regularly sampled.

- fitPolynomial(field, window, order, min_periods=0, **kwargs)

Fits a polynomial model to the data.

The fit is calculated by fitting a polynomial of degree order to a data slice of size window that has x at its center.

Note that the result is stored in field and overwrites it, unless a target is given.

In case your data is sampled at an equidistant frequency grid:

If you know your data to have no significant number of missing values, or if you do not want to calculate residuals for windows containing missing values anyway, performance can be increased by setting min_periods=window.

Note that the initial and final window/2 values do not get fitted.

Each residual gets assigned the worst flag present in the interval of the original data.

- Parameters:

field (str | list[str]) – Variable to process.

window (int | str) – Size of the window you want to use for fitting. If an integer is passed, the size refers to the number of periods for every fitting window. If an offset string is passed, the size refers to the total temporal extension. The window will be centered around the value to be fitted. For regularly sampled data, an odd number of periods is always used for the fit (periods - 1 if periods is even).

order (int) – Degree of the polynomial used for fitting.

min_periods (int (default: 0)) – Minimum number of observations in a window required to perform the fit, otherwise NaNs will be assigned. If None, min_periods defaults to 1 for integer windows and to the size of the window for offset-based windows. Passing 0 disables the feature and will result in overfitting for too sparse windows.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC
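The centered fit can be sketched in plain Python for regularly sampled data and an integer window. This is an illustration, not saqc's implementation: fit_polynomial and _solve are hypothetical helpers, missing values and min_periods are not handled, and the least-squares coefficients are obtained from the normal equations. Because positions are measured relative to the window center, evaluating the fitted polynomial at the center amounts to taking its constant coefficient.

```python
import math


def _solve(a, b):
    # Gaussian elimination with partial pivoting for a small dense system a @ x = b.
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented copy, a is not modified
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_polynomial(values, window, order):
    """Hypothetical helper: centered rolling polynomial fit over an integer window."""
    if window % 2 == 0:
        window -= 1  # use an odd number of periods (periods - 1 if even)
    half = window // 2
    xs = list(range(-half, half + 1))  # positions relative to the window center
    # Normal equations (X^T X) c = X^T y with X[i][k] = xs[i] ** k.
    xtx = [[sum(x ** (j + k) for x in xs) for k in range(order + 1)] for j in range(order + 1)]
    fitted = [math.nan] * len(values)  # the first and last `half` values stay unfitted
    for center in range(half, len(values) - half):
        y = values[center - half:center + half + 1]
        xty = [sum((x ** j) * v for x, v in zip(xs, y)) for j in range(order + 1)]
        fitted[center] = _solve(xtx, xty)[0]  # polynomial value at the window center
    return fitted
```

Residuals in the sense of calculatePolynomialResiduals would then be the element-wise differences between the data and these fitted values.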

- flagByClick(field, max_gap=None, gui_mode='GUI', selection_marker_kwargs=None, dfilter=255.0, **kwargs)

Pop up a GUI for adding or removing flags by selecting points in the data plot.

Left-click and drag the selection area over the points you want to add to the selection.

Right-click and drag the selection area over the points you want to remove from the selection.

Press 'shift' to switch between the rectangle and the span selector.

Press 'enter' or click "Assign Flags" to assign flags to the selected points and end the session.

Press 'escape' or click "Discard" to end the session without assigning flags to the selection.

Activate the sliders attached to each axis to bind the respective variable. When using the span selector, points from all bound variables will be added synchronously.

Note that you can only mark already flagged values if dfilter is set accordingly.

Note that you can use flagByClick to "unflag" already flagged values by setting dfilter above the flag you want to unset, and setting flag to the flagging level associated with your "unflagged" level.

- Parameters:

field (str | list[str]) – Variable to process.

max_gap (Optional[str] (default: None)) – If None, all data points will be connected, resulting in long linear lines in case of large data gaps. NaN values will be removed before plotting. If an offset string is passed, only points that have a distance below max_gap are connected via the plotting line.

gui_mode (Literal['GUI', 'overlay'] (default: 'GUI')) – "GUI" (default) spawns a TK-based pop-up GUI, enabling scrolling and binding for subplots. "overlay" spawns a matplotlib-based pop-up GUI, which may be less conflicting but does not support scrolling or binding.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

- flagByGrubbs(field, window, alpha=0.05, min_periods=8, pedantic=False, flag=255.0, **kwargs)

Flag outliers using the Grubbs algorithm.

Deprecated since version 2.6.0: Use flagUniLOF() or flagZScore() instead.

- Parameters:

field (str | list[str]) – Variable to process.

window (str | int) – Size of the testing window. If an integer, the fixed number of observations used for each window. If an offset string, the time period of each window.

alpha (float (default: 0.05)) – Level of significance at which the Grubbs test is to be performed. Must be between 0 and 1.

min_periods (int (default: 8)) – Minimum number of values needed in a window in order to perform the Grubbs test. Ignored if window is an integer.

pedantic (bool (default: False)) – If True, every value gets checked twice: first in the initial rolling window and second in a rolling window that is lagging by window / 2. Recommended to avoid false positives at the window edges. Ignored if window is an offset string.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

References

Introduction to the Grubbs test:

[1] https://en.wikipedia.org/wiki/Grubbs%27s_test_for_outliers

- flagByScatterLowpass(field, window, thresh, func='std', sub_window=None, sub_thresh=None, min_periods=None, flag=255.0, **kwargs)

Flag data chunks of length window dependent on the data deviation.

Flag data chunks of length window if

1. they exceed thresh with regard to func and
2. all (maybe overlapping) sub-chunks of the data chunks with length sub_window exceed sub_thresh with regard to func.

- Parameters:

field (str | list[str]) – Variable to process.

func (Union[Literal['std', 'var', 'mad'], Callable[[ndarray, Series], float]] (default: 'std')) – Either a string determining the aggregation function applied to every chunk:

- 'std': standard deviation
- 'var': variance
- 'mad': median absolute deviation

or a callable mapping one-dimensional array-likes onto scalars.

window (str | Timedelta) – Window (i.e. chunk) size.

thresh (float) – Threshold. A given chunk is flagged if the return value of func exceeds thresh.

sub_window (UnionType[str, Timedelta, None] (default: None)) – Window size of sub-chunks that are additionally tested for exceeding sub_thresh with respect to func.

sub_thresh (Optional[float] (default: None)) – Threshold. A given sub-chunk is flagged if the return value of func exceeds sub_thresh.

min_periods (Optional[int] (default: None)) – Minimum number of values needed in a chunk to perform the test. Ignored if window is an integer.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC
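The two-stage chunk test can be sketched in plain Python. This is not saqc's implementation: scatter_lowpass_flags is a hypothetical helper that works on count-based chunks instead of time windows, uses the sample standard deviation (statistics.stdev) as the default func, treats every overlapping sub-chunk of length sub_window inside a chunk as one to test, and ignores min_periods.

```python
from statistics import stdev


def scatter_lowpass_flags(values, window, thresh, sub_window=None, sub_thresh=None, func=stdev):
    """Hypothetical helper: one boolean per value, True where its chunk is flagged."""
    flags = [False] * len(values)
    for start in range(0, len(values), window):
        chunk = values[start:start + window]
        # stdev needs at least two values; the chunk itself must exceed thresh.
        if len(chunk) < 2 or func(chunk) <= thresh:
            continue
        if sub_window is not None and sub_thresh is not None:
            # All (overlapping) sub-chunks of length sub_window must also exceed sub_thresh.
            subs = [chunk[i:i + sub_window] for i in range(len(chunk) - sub_window + 1)]
            if not all(len(s) >= 2 and func(s) > sub_thresh for s in subs):
                continue
        flags[start:start + window] = [True] * len(chunk)
    return flags
```

The sub-chunk condition keeps a single isolated spike from flagging a whole chunk: the chunk only gets flagged when the high scatter persists throughout it.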

- flagByStatLowPass(field, window, thresh, func='std', sub_window=None, sub_thresh=None, min_periods=None, flag=255.0, **kwargs)

Flag data chunks of length window dependent on the data deviation.

Flag data chunks of length window if

1. they exceed thresh with regard to func and
2. all (maybe overlapping) sub-chunks of the data chunks with length sub_window exceed sub_thresh with regard to func.

Deprecated since version 2.5.0: Deprecated function. See flagByScatterLowpass().

- Parameters:

func (Union[Literal['std', 'var', 'mad'], Callable[[ndarray, Series], float]] (default: 'std')) – Either a string determining the aggregation function applied to every chunk:

- 'std': standard deviation
- 'var': variance
- 'mad': median absolute deviation

or a callable mapping one-dimensional array-likes onto scalars.

window (str | Timedelta) – Window (i.e. chunk) size.

thresh (float) – Threshold. A given chunk is flagged if the return value of func exceeds thresh.

sub_window (UnionType[str, Timedelta, None] (default: None)) – Window size of sub-chunks that are additionally tested for exceeding sub_thresh with respect to func.

sub_thresh (Optional[float] (default: None)) – Threshold. A given sub-chunk is flagged if the return value of func exceeds sub_thresh.

min_periods (Optional[int] (default: None)) – Minimum number of values needed in a chunk to perform the test. Ignored if window is an integer.

- Return type:

SaQC

- flagByStray(field, window=None, min_periods=11, iter_start=0.5, alpha=0.05, flag=255.0, **kwargs)

Flag outliers in 1-dimensional (score) data using the STRAY algorithm.

For more details about the algorithm please refer to [1].

- Parameters:

field (str | list[str]) – Variable to process.

window (UnionType[int, str, None] (default: None)) – Determines the segmentation of the data into partitions to which the kNN algorithm is applied individually:

- None: apply scoring to the whole data set at once
- int: apply scoring to successive data chunks of periods with the given length. Must be greater than 0.
- offset string: apply scoring to successive partitions of temporal extension matching the passed offset string

min_periods (int (default: 11)) – Minimum number of periods per partition that have to be present for a valid outlier detection to be made in this partition.

iter_start (float (default: 0.5)) – Float in [0, 1] that determines which percentage of data is considered "normal". 0.5 results in the STRAY algorithm searching only the upper 50% of the scores for the cut-off point (see the References section for more information).

alpha (float (default: 0.05)) – Level of significance at which it is tested whether a score might be drawn from a distribution other than that of the majority of the data.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

References

- [1] Priyanga Dilini Talagala, Rob J. Hyndman & Kate Smith-Miles (2021): Anomaly Detection in High-Dimensional Data. Journal of Computational and Graphical Statistics, 30:2, 360-374. DOI: 10.1080/10618600.2020.1807997

- flagByVariance(field, window, thresh, maxna=None, maxna_group=None, flag=255.0, **kwargs)

Flag low-variance data.

Flags plateaus of constant data if the variance in a rolling window does not exceed a certain threshold.

Any interval of values y(t), …, y(t+n) is flagged if:

(1): n > window

(2): variance(y(t), …, y(t+n)) < thresh

- Parameters:

field (str | list[str]) – Variable to process.

window (str) – Size of the moving window, given as an offset: the time period of each window. Each window is sized based on the number of observations included in that time period.

thresh (float) – Maximum total variance allowed per window.

maxna (Optional[int] (default: None)) – Maximum number of NaNs allowed in a window. If more NaNs are present, the window is not flagged.

maxna_group (Optional[int] (default: None)) – Same as maxna, but for consecutive NaNs.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC
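A count-based sketch of the low-variance criterion follows, assuming windows of a fixed number of consecutive values (at least two) and no NaN handling, so maxna and maxna_group are ignored. It uses the sample variance from Python's statistics module; flag_low_variance is a hypothetical helper, not the saqc implementation, which works on offset-defined windows.

```python
from statistics import variance


def flag_low_variance(values, window, thresh):
    """Hypothetical helper: flag every value inside a run of `window` consecutive
    values whose sample variance stays below `thresh`."""
    flags = [False] * len(values)
    for start in range(len(values) - window + 1):
        segment = values[start:start + window]
        if variance(segment) < thresh:
            # Mark the whole low-variance window; overlapping windows merge into plateaus.
            for i in range(start, start + window):
                flags[i] = True
    return flags
```

Because overlapping windows all mark their values, a plateau longer than window ends up flagged as one contiguous block.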

- flagChangePoints(field, stat_func, thresh_func, window, min_periods, reduce_window=None, reduce_func=<function ChangepointsMixin.<lambda>>, flag=255.0, **kwargs)

Flag values that represent a system state transition.

Flag data points where the parametrization of the assumed process generating this data changes significantly.

- Parameters:

field (str | list[str]) – Variable to process.

stat_func (Callable[[ndarray, ndarray], float]) – A function that assigns a value to every twin window. The backward-facing window content will be passed as the first array, the forward-facing window content as the second.

thresh_func (Callable[[ndarray, ndarray], float]) – A function that determines the value level; exceeding it qualifies a timestamp's stat_func value as denoting a changepoint.

window (Union[str, Tuple[str, str]]) – Size of the moving windows. This is the number of observations used for calculating the statistic.

If it is a single frequency offset, it applies to both the backward- and the forward-facing window.

If two offsets are passed (as a tuple), the first defines the size of the backward-facing window, the second the size of the forward-facing window.

min_periods (Union[int, Tuple[int, int]]) – Minimum number of observations in a window required to perform the changepoint test. If it is a tuple of two ints, the first refers to the backward-facing, the second to the forward-facing window.

reduce_window (Optional[str] (default: None)) – The sliding window search method is not an exact changepoint search method, and usually it will not detect a single changepoint but a "region" of change around a changepoint.

If reduce_window is given, then for every window of size reduce_window, the value with index reduce_func(x, y) is selected and the others are dropped.

If reduce_window is None, the reduction window size equals the twin window size the changepoints have been detected with.

reduce_func (default argmax) – A function that must return an index value upon input of two arrays x and y. The first input parameter holds the result of the stat_func evaluation for every reduction window. The second input parameter holds the result of the thresh_func evaluation. The default reduction function selects the value that maximizes stat_func.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC
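The twin-window search can be sketched as follows. The sketch assumes integer window sizes, a hypothetical stat_func that takes the absolute difference of the backward and forward window means, and a constant threshold in place of thresh_func. As a simplification of the reduce_window/reduce_func step, only the position with the maximum statistic within each run of consecutive candidates is kept, mirroring the default argmax reduction. flag_change_points is illustrative and not part of saqc.

```python
from statistics import fmean


def flag_change_points(values, window, thresh):
    """Hypothetical helper: return the indices where a forward window starts at a changepoint."""
    n = len(values)
    stats = {}
    for i in range(window, n - window + 1):
        backward = values[i - window:i]  # first array passed to stat_func
        forward = values[i:i + window]   # second array passed to stat_func
        stat = abs(fmean(forward) - fmean(backward))  # hypothetical stat_func
        if stat > thresh:                # constant stands in for thresh_func
            stats[i] = stat
    # Simplified reduction: keep the argmax within each run of consecutive candidates.
    change_points, run = [], []
    for i in sorted(stats):
        if run and i != run[-1] + 1:
            change_points.append(max(run, key=stats.get))
            run = []
        run.append(i)
    if run:
        change_points.append(max(run, key=stats.get))
    return change_points
```

Without the reduction step, every position in the "region" of change around index 6 in a series like [0.0] * 6 + [10.0] * 6 would be reported, which is exactly the behavior reduce_window is meant to address.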

- flagConstants(field, thresh, window, min_periods=2, flag=255.0, **kwargs)

Flag constant data values.

Flags plateaus of constant data if their maximum total change in a rolling window does not exceed a certain threshold.

- Any interval of values y(t), …, y(t+n) is flagged if:

(1): n > window

(2): abs(y(t + i) - y(t + j)) < thresh, for all i, j in [0, 1, …, n]

- Parameters:

field (str | list[str]) – Variable to process.

thresh (float) – Maximum total change allowed per window.

window (int | str) – Size of the moving window. This determines the number of observations used for calculating the absolute change per window. Each window will either contain a fixed number of periods (integer-defined window) or have a fixed temporal extension (offset-defined window).

min_periods (int (default: 2)) – Minimum number of observations in a window required to generate a flag. This can be used to exclude underpopulated offset-defined windows from flagging. (Integer-defined windows will always contain exactly window samples.) Must be an integer greater than or equal to 2, because a single value would always be considered constant. Defaults to 2.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC
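Condition (2) holds exactly when the range max - min of the interval is below thresh, so the pairwise comparison never has to be spelled out. The sketch below assumes integer windows of exactly window consecutive values and no NaN handling; flag_constants is a hypothetical helper, not saqc's implementation.

```python
def flag_constants(values, thresh, window):
    """Hypothetical helper: flag every value inside a window of `window`
    consecutive values whose pairwise absolute differences stay below `thresh`."""
    flags = [False] * len(values)
    for start in range(len(values) - window + 1):
        segment = values[start:start + window]
        # max - min < thresh  <=>  abs(y(t+i) - y(t+j)) < thresh for all i, j
        if max(segment) - min(segment) < thresh:
            for i in range(start, start + window):
                flags[i] = True
    return flags
```

Checking the range costs one pass per window instead of comparing all value pairs.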

- flagDriftFromNorm(field, window, spread, frac=0.5, metric=<function cityblock>, method='single', flag=255.0, **kwargs)#

Flags data that deviates from an avarage data course.

“Normality” is determined in terms of a maximum spreading distance, that members of a normal group must not exceed. In addition, only a group is considered “normal” if it contains more then frac percent of the variables in “field”.

See the Notes section for a more detailed presentation of the algorithm.

- Parameters:

field (str | list[str]) – Variable to process.

window (str) – Frequency used to split the data into chunks.

spread (float) – Maximum spread allowed in the group of normal data. See the Notes section for more details.

frac (float (default: 0.5)) – Fraction defining the normal group. Use a value from the interval [0, 1]. The higher the value, the more stable the algorithm will be. For values below 0.5, the results are undefined.

metric (default cityblock) – Distance function that takes two arrays as input and returns a scalar float. This value is interpreted as the distance between the two input arrays. Defaults to the averaged Manhattan metric (see Notes).

method (Literal['single', 'complete', 'average', 'weighted', 'centroid', 'median', 'ward'] (default: 'single')) – Linkage method used for hierarchical (agglomerative) clustering of the data. method is passed directly to scipy.cluster.hierarchy.linkage. See its documentation [1] for more details. For a general introduction to hierarchical clustering, see [2].

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

Notes

The following steps are performed for every data “segment” of length window in order to find the “abnormal” data:

1. Calculate the distances \(d(x_i, x_j)\) for all pairs of variables \(x_i, x_j\) in field (with \(d\) denoting the distance function specified by metric).

2. Calculate a dendrogram with a hierarchical linkage algorithm, specified by method.

3. Flatten the dendrogram at the level where the agglomeration costs exceed spread.

4. Check whether there is a cluster containing more than a fraction frac of the variables:

   - if yes: flag all variables that are not in that cluster (inside the segment)
   - if no: flag nothing

The main parameter controlling the algorithm's behavior is spread. It determines the maximum spread of a normal group by limiting the costs that a cluster agglomeration must not exceed in any linkage step. For singleton clusters, these costs equal half the distance between the data in the two clusters. Hence, data that are more than 2*spread apart can never be clustered together. When data are clustered together, the distance of the new cluster to all other data/clusters is calculated according to the linkage method specified by method. By default, this is the minimum distance between the members of the clusters. With this in mind, it is advisable to choose a distance function that can be interpreted in the units of the measurement and whose interpretation is invariant over the length of the data. That is why the averaged Manhattan metric is the default metric: it corresponds to the average value distance between two data sets (as opposed to the Euclidean distance, for example).
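The per-segment procedure in the Notes can be sketched with SciPy's hierarchical clustering. This is a minimal illustration under stated assumptions, not SaQC's code: the helpers `averaged_manhattan`, `abnormal_variables` and `drift_from_norm_mask` are hypothetical, segments are formed with `pandas.Grouper(freq=window)`, and the dendrogram is cut with `fcluster(..., criterion="distance")` directly at spread. SciPy's merge height for two singletons is their full distance, so the scaling relative to spread may not match the "half the distance" convention described above.

```python
import numpy as np
import pandas as pd
from scipy.cluster.hierarchy import fcluster, linkage
from scipy.spatial.distance import pdist


def averaged_manhattan(x: np.ndarray, y: np.ndarray) -> float:
    """Mean absolute difference: an 'averaged Manhattan' distance (assumed form)."""
    return float(np.mean(np.abs(x - y)))


def abnormal_variables(segment: pd.DataFrame, spread: float, frac: float = 0.5,
                       method: str = "single") -> list:
    """Steps 1-4 of the Notes for one segment (columns = variables)."""
    if segment.shape[0] == 0 or segment.shape[1] < 2:
        return []
    # Step 1: condensed pairwise distance matrix between the variables
    dists = pdist(segment.T.to_numpy(dtype=float), metric=averaged_manhattan)
    # Step 2: dendrogram via agglomerative clustering
    tree = linkage(dists, method=method)
    # Step 3: cut the dendrogram where merge heights exceed `spread`
    labels = fcluster(tree, t=spread, criterion="distance")
    # Step 4: is the largest cluster big enough to count as "normal"?
    sizes = np.bincount(labels)
    normal = sizes.argmax()
    if sizes[normal] <= frac * segment.shape[1]:
        return []
    return [col for col, lab in zip(segment.columns, labels) if lab != normal]


def drift_from_norm_mask(df: pd.DataFrame, window: str, spread: float,
                         frac: float = 0.5, method: str = "single") -> pd.DataFrame:
    """Hypothetical driver: run the per-segment check on every `window` chunk."""
    mask = pd.DataFrame(False, index=df.index, columns=df.columns)
    for _, segment in df.groupby(pd.Grouper(freq=window)):
        for col in abnormal_variables(segment, spread, frac, method):
            mask.loc[segment.index, col] = True
    return mask


idx = pd.date_range("2024-01-01", periods=48, freq="h")
base = np.sin(np.arange(48) / 5.0)
df = pd.DataFrame({"a": base, "b": base + 0.01, "c": base + 0.02}, index=idx)
df.loc[idx[24:], "c"] += 5.0  # "c" drifts away from the group on the second day
mask = drift_from_norm_mask(df, window="1D", spread=0.5)
```

On the first day all three variables fall into one cluster, so nothing is flagged; on the second day "c" separates from the two-member majority cluster and is flagged for that segment only.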

References

- Documentation of the underlying hierarchical clustering algorithm:

[1] https://docs.scipy.org/doc/scipy/reference/generated/scipy.cluster.hierarchy.linkage.html

- Introduction to Hierarchical clustering:

- flagDriftFromReference(field, reference, freq, thresh, metric=<function cityblock>, flag=255.0, **kwargs)

Flags data that deviates from a reference course. Deviation is measured by a custom distance function.

- Parameters:

field (str | list[str]) – Variable to process.

freq (str) – Frequency used to split the data into chunks.

reference (str) – Reference variable from which the deviation is calculated.

thresh (float) – Maximum deviation from reference.

metric (default cityblock) – Distance function. Takes two arrays as input and returns a scalar float. This value is interpreted as the mutual distance of the two input arrays. Defaults to the averaged Manhattan metric (see Notes).

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

Notes

It is advisable to choose a distance function that can be interpreted in the units of the measurement and whose interpretation is invariant over the length of the data. That is why the averaged Manhattan metric is the default metric: it corresponds to the average value distance between two data sets (as opposed to the Euclidean distance, for example).
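A minimal pandas sketch of the chunk-wise comparison, assuming that every chunk of length freq is flagged as a whole for a variable whose distance to reference exceeds thresh. The helpers `averaged_manhattan` and `drift_from_reference_mask` are hypothetical illustrations, not SaQC's API, and the exact way SaQC assigns flags inside a chunk may differ.

```python
import numpy as np
import pandas as pd


def averaged_manhattan(x: np.ndarray, y: np.ndarray) -> float:
    """Mean absolute difference, read in the units of the measurement."""
    return float(np.mean(np.abs(x - y)))


def drift_from_reference_mask(df, reference, freq, thresh, metric=averaged_manhattan):
    """Hypothetical helper: for each `freq` chunk, flag a variable over the whole
    chunk if its distance to `reference` exceeds `thresh`."""
    mask = pd.DataFrame(False, index=df.index, columns=df.columns.drop(reference))
    for _, chunk in df.groupby(pd.Grouper(freq=freq)):
        if chunk.empty:
            continue
        ref = chunk[reference].to_numpy(dtype=float)
        for col in mask.columns:
            if metric(chunk[col].to_numpy(dtype=float), ref) > thresh:
                mask.loc[chunk.index, col] = True
    return mask


idx = pd.date_range("2024-01-01", periods=48, freq="h")
ref = np.cos(np.arange(48) / 6.0)
df = pd.DataFrame({"ref": ref, "x": ref + 0.1, "y": ref - 0.2}, index=idx)
df.loc[idx[24:], "x"] += 3.0  # "x" drifts away from the reference on the second day
mask = drift_from_reference_mask(df, reference="ref", freq="1D", thresh=1.0)
```

Because the averaged Manhattan distance is a mean absolute difference, thresh can be read directly in the units of the measurement: here "x" is on average 3.1 units away from "ref" on the second day and is flagged only there.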

- flagDummy(field, **kwargs)

This function does nothing but return data and flags.

- Parameters:

field (str | list[str]) – Variable to process.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

- flagGeneric(field, func, target=None, flag=255.0, **kwargs)

Flag data based on a given function.

Evaluate func on all variables given in field.

- Parameters:

field (str | list[str]) – Variable to process.

func (GenericFunction) – Function to call. The function needs to accept the same number of arguments (of type pandas.Series) as variables given in field and return an iterable of array-like objects of data type bool with the same length as target.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

Examples

Flag the variable ‘rainfall’, if the sum of the variables ‘temperature’ and ‘uncertainty’ is below zero:

qc = qc.flagGeneric(field=["temperature", "uncertainty"], target="rainfall", func=lambda x, y: x + y < 0)

Flag the variable ‘temperature’, where the variable ‘fan’ is flagged:

qc = qc.flagGeneric(field="fan", target="temperature", func=lambda x: isflagged(x))

The generic functions also support all pandas and numpy functions:

qc = qc.flagGeneric(field="fan", target="temperature", func=lambda x: np.sqrt(x) < 7)

- flagIsolated(field, gap_window, group_window, flag=255.0, **kwargs)

Find and flag temporally isolated groups of data.

The function flags arbitrarily large groups of values, if they are surrounded by sufficiently large data gaps. A gap is a timespan containing either no data at all or NaNs only.

- Parameters:

field (str | list[str]) – Variable to process.

gap_window (str) – Minimum gap size required before and after a data group to consider it isolated. See conditions (2) and (3).

group_window (str) – Maximum size of a data chunk to consider it a candidate for an isolated group. Data chunks that are bigger than the group_window are ignored. This does not include the possible gaps surrounding it. See condition (1).

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

Notes

A series of values \(x_k, x_{k+1}, ..., x_{k+n}\), with associated timestamps \(t_k, t_{k+1}, ..., t_{k+n}\), is considered to be isolated if:

(1) \(t_{k+n} - t_k <\) group_window

(2) None of the \(x_j\) with \(0 < t_k - t_j <\) gap_window is valid (preceding gap).

(3) None of the \(x_j\) with \(0 < t_j - t_{k+n} <\) gap_window is valid (succeeding gap).

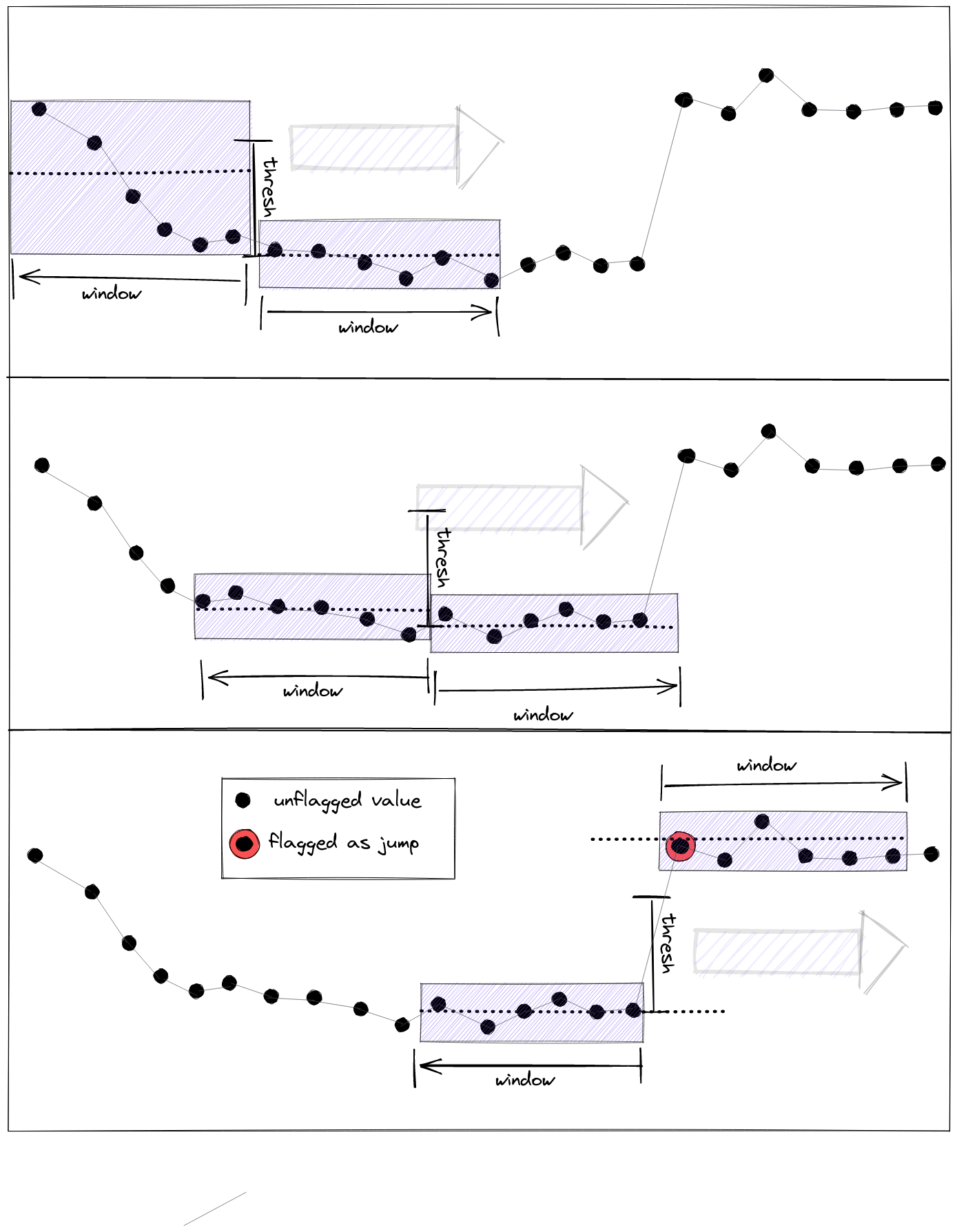
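A minimal pandas sketch of the isolation conditions (group length below group_window, no valid value within gap_window before or after the group), assuming a series with a DatetimeIndex where NaN marks missing data. Splitting the valid timestamps wherever the distance to the previous valid value is at least gap_window yields exactly the candidate groups that satisfy both gap conditions; each candidate is then flagged if it is shorter than group_window. The helper `isolated_mask` is hypothetical, and treating the start and end of the series as gaps is an assumption read literally from the conditions, not confirmed SaQC behavior.

```python
import numpy as np
import pandas as pd


def isolated_mask(series: pd.Series, gap_window: str, group_window: str) -> pd.Series:
    """Hypothetical helper applying the isolation conditions to a time-indexed series."""
    gap = pd.Timedelta(gap_window)
    group = pd.Timedelta(group_window)
    mask = pd.Series(False, index=series.index)
    times = series.dropna().index.to_series()  # timestamps of valid values only
    if times.empty:
        return mask
    # A new chunk starts wherever the previous valid value is at least `gap` away,
    # so every chunk has sufficient gaps on both sides (series edges count as gaps).
    chunk_id = (times.diff() >= gap).cumsum()
    for _, stamps in times.groupby(chunk_id):
        # Group-length condition: the chunk itself must be shorter than `group`
        if stamps.iloc[-1] - stamps.iloc[0] < group:
            mask.loc[stamps.index] = True
    return mask


idx = pd.date_range("2024-01-01", periods=16, freq="h")
values = pd.Series(np.nan, index=idx)
values.iloc[[0, 1, 2, 3, 6, 10, 11, 12, 13, 14, 15]] = 1.0
mask = isolated_mask(values, gap_window="3h", group_window="2h")
print(mask[mask].index.tolist())  # only the 06:00 value is isolated
```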

- flagJumps(field, thresh, window, min_periods=1, flag=255.0, dfilter=-inf, **kwargs)

Flag jumps and drops in data.

Flag data where the mean of its values changes significantly (where the data “jumps” from one value level to another). Value changes are detected by comparing the means of two adjacent rolling windows. Whenever the difference between the means in the two windows exceeds thresh, the value between the windows is flagged.

- Parameters:

field (str | list[str]) – Variable to process.

thresh (float) – Threshold value by which the mean of the data has to jump to trigger flagging.

window (str) – Size of the two moving windows. This determines the number of observations used for calculating the mean in every window. The window size should be large enough to yield enough samples for a reliable mean calculation, but it should not be arbitrarily large either, since it also limits the density of jumps that can be detected. More precisely: jumps that are less than three quarters (3/4) of the selected window size apart will not be detected reliably.

min_periods (int (default: 1)) – The minimum number of observations in window required to calculate a valid mean value.

target (str | list[str], optional) – Variable name to which the results are written. target will be created if it does not exist. Defaults to field.

dfilter (Any, optional) – Defines which observations will be masked based on the already existing flags. Any data point with a flag equal to or worse than this threshold will be passed as NaN to the function. Defaults to the DFILTER_ALL value of the translation scheme.

flag (Any, optional) – The flag value the function uses to mark observations. Defaults to the BAD value of the translation scheme.

- Returns:

SaQC – the updated SaQC object

- Return type:

SaQC

Examples

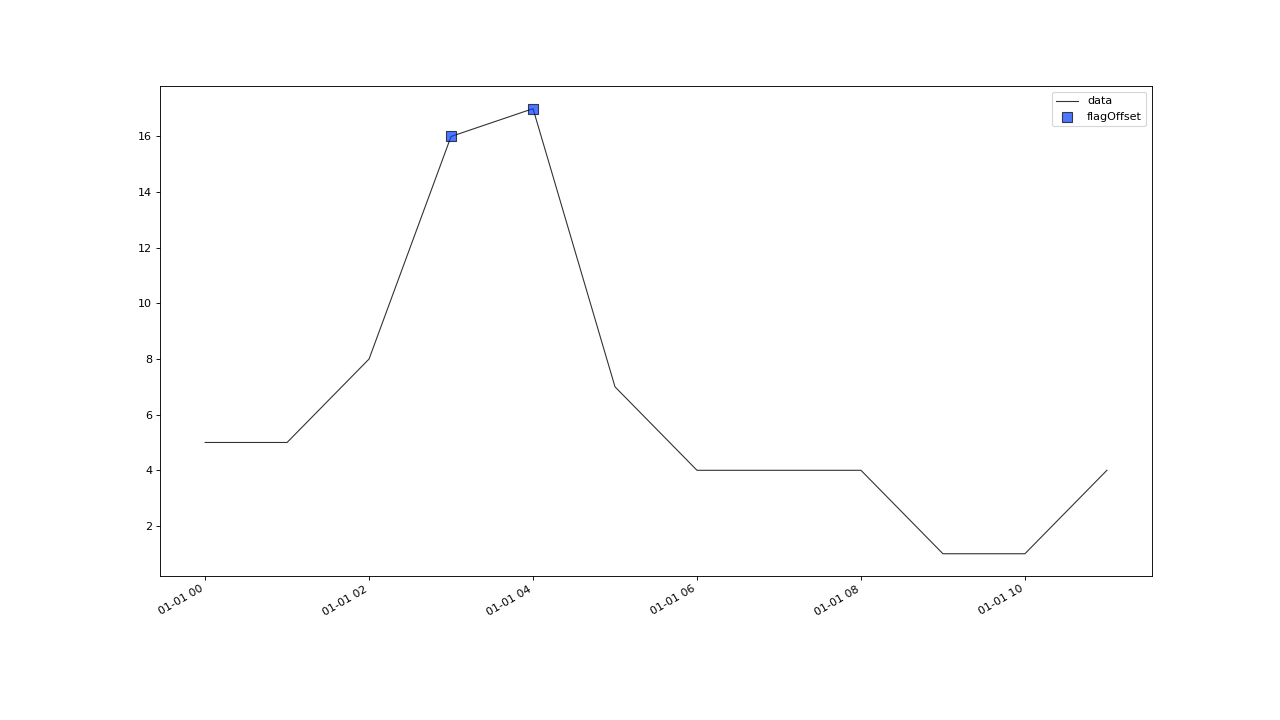

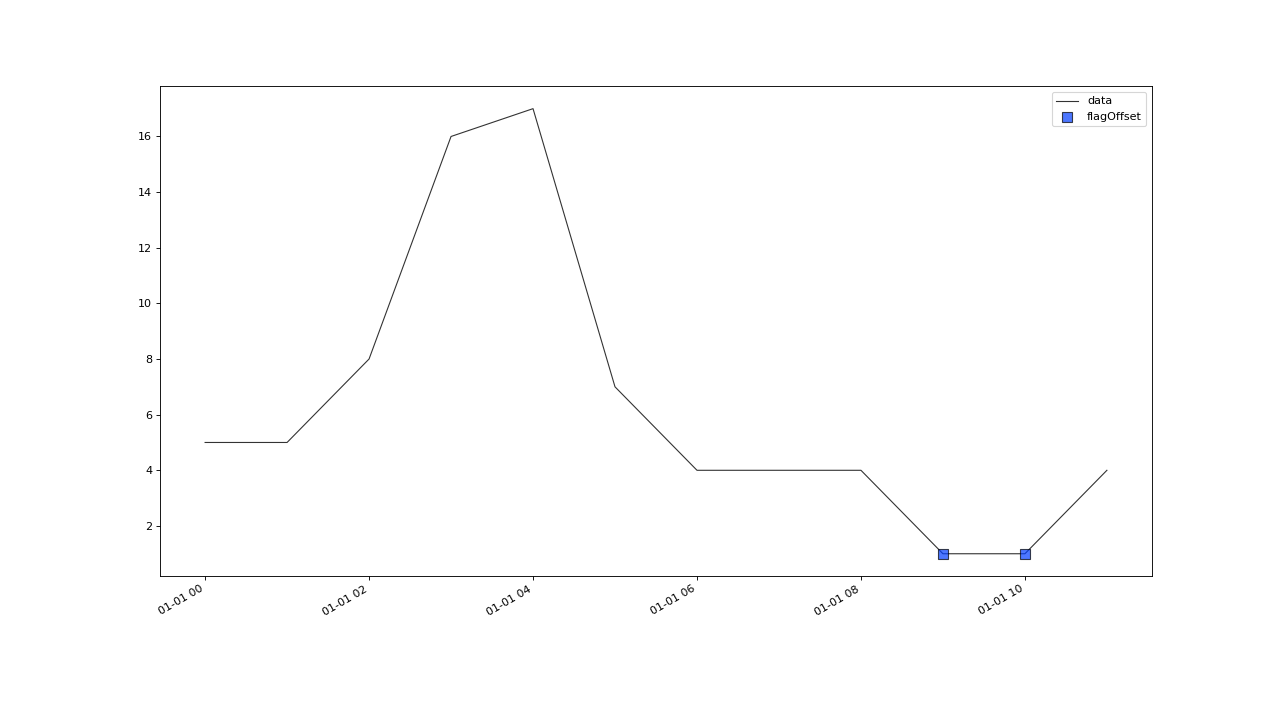

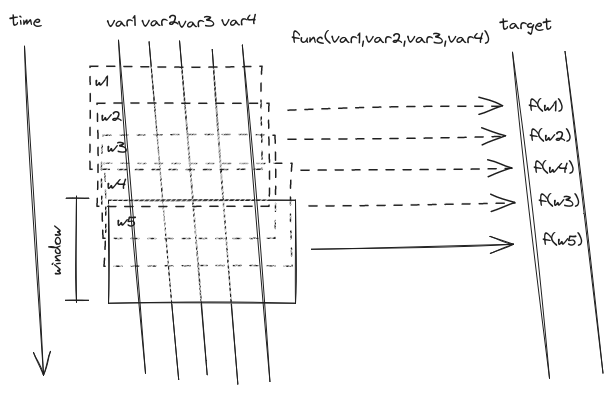

The accompanying picture gives an abstract interpretation of the parameter interplay in the case of a positive value jump, initialising a new mean level.

The two adjacent windows of size window roll through the whole data series. Whenever the mean values in the two windows differ by more than thresh, flagging is triggered.

Notes

Jumps that are less than three quarters (3/4) of the selected window size apart will not be detected reliably.
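The two-window comparison can be sketched in pandas for an integer window of samples. This is an illustration under assumptions, not SaQC's implementation: the helper `jump_mask` is hypothetical, it flags the last sample of the left window (one reading of "the value between the windows"), and it keeps only the position where the mean difference peaks within ±window samples, because several neighbouring positions exceed thresh near a single jump. It ignores min_periods and offset-defined windows.

```python
import pandas as pd


def jump_mask(series: pd.Series, thresh: float, window: int) -> pd.Series:
    """Hypothetical helper: compare the means of two adjacent windows of `window` samples."""
    rolling_mean = series.rolling(window).mean()
    left = rolling_mean                  # mean of samples i-window+1 .. i
    right = rolling_mean.shift(-window)  # mean of samples i+1 .. i+window
    diff = (right - left).abs()
    exceeds = diff > thresh
    # Neighbouring positions also exceed thresh near a jump; keep only the
    # position where the difference peaks within +/- window samples.
    peak = diff == diff.rolling(2 * window + 1, center=True, min_periods=1).max()
    return exceeds & peak


s = pd.Series([0.0] * 10 + [5.0] * 10)
mask = jump_mask(s, thresh=3.0, window=3)
print(mask[mask].index.tolist())  # [9]
```

The peak step also hints at the spacing limit noted above: two jumps closer together than the comparison windows blur into overlapping mean differences and compete for the same local maximum.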

- flagLOF(field, n=20, thresh=1.5, algorithm='ball_tree', p=1, flag=255.0, **kwargs)

Flag values where the Local Outlier Factor (LOF) exceeds thresh.
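For orientation, the textbook LOF score can be sketched in plain NumPy: the k-distance of each point, reachability distances, local reachability densities, and finally the ratio of the neighbours' average density to the point's own density. This is a hypothetical brute-force sketch (`lof_scores` is not SaQC's API). It replaces the tree-based neighbour search selected by algorithm with a full pairwise distance matrix (O(N²) memory), interprets p as the Minkowski order, and does not reproduce any preprocessing SaQC may apply. Values would then be flagged where the score exceeds thresh.

```python
import numpy as np


def lof_scores(X, n: int = 20, p: float = 1.0) -> np.ndarray:
    """Brute-force Local Outlier Factor with a Minkowski distance of order p."""
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:
        X = X[:, None]  # treat a single variable as 1-D feature vectors
    # Pairwise Minkowski distances (p=1: Manhattan)
    dist = (np.abs(X[:, None, :] - X[None, :, :]) ** p).sum(axis=-1) ** (1.0 / p)
    np.fill_diagonal(dist, np.inf)  # a point is not its own neighbour
    k = min(n, len(X) - 1)
    nbrs = np.argsort(dist, axis=1)[:, :k]            # indices of the k nearest neighbours
    nbr_dist = np.take_along_axis(dist, nbrs, axis=1)  # their distances, ascending
    k_distance = nbr_dist[:, -1]                       # distance to the k-th neighbour
    # Reachability distance: max(k-distance of the neighbour, actual distance)
    reach = np.maximum(nbr_dist, k_distance[nbrs])
    # Local reachability density (small constant guards against duplicate points)
    lrd = 1.0 / (reach.mean(axis=1) + 1e-10)
    # LOF: mean density of the neighbours relative to the point's own density
    return lrd[nbrs].mean(axis=1) / lrd


values = [0.9, 0.95, 1.0, 1.05, 1.1, 10.0]
scores = lof_scores(values, n=3)
print((scores > 1.5).tolist())  # [False, False, False, False, False, True]
```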

- Parameters:

field (str | list[str]) – Variable to process.

n (int (default: 20)) – Number of neighbors to be included in the LOF calculation. Defaults to 20, which is a value found to be suitable in the literature.

n determines the “locality” of an observation (its n nearest neighbors) and sets the upper limit to the number of values in outlier clusters (i.e. consecutive outliers). Outlier clusters of size greater than n/2 may not be detected reliably. The larger